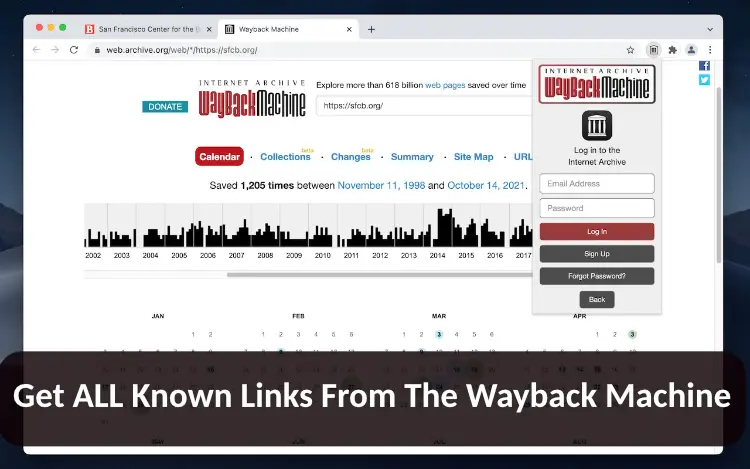

How To Get All The Wayback Machine Links For A Domain

Ever wanted to see everything the Wayback Machine has saved for a domain? Ever wanted to get all of it at once? Here are a couple of tools, including an entire Wayback Machine OSINT framework, that give you everything you need to pull what you want out of the archive. Here’s the easy one, waybackurls.py:

Just give it the domain you want to search, and if you DO want it to include subdomains, add true at the end.

    python3 waybackurls.py example.com true   # with subdomains
    python3 waybackurls.py example.com        # without subdomains

It’ll save the results in a JSON file named <host>-waybackurls.json in the directory you ran it from.

    import json
    import sys

    import requests


    def waybackurls(host, with_subs):
        """Return every archived URL the CDX API lists for host."""
        if with_subs:
            # The *. prefix makes the query match subdomains as well
            url = 'http://web.archive.org/cdx/search/cdx?url=*.%s/*&output=json&fl=original&collapse=urlkey' % host
        else:
            url = 'http://web.archive.org/cdx/search/cdx?url=%s/*&output=json&fl=original&collapse=urlkey' % host
        r = requests.get(url)
        results = r.json()
        # The first row is the column header, so skip it
        return results[1:]


    if __name__ == '__main__':
        argc = len(sys.argv)
        if argc < 2:
            print('Usage:\n\tpython3 waybackurls.py <url> <include_subdomains:optional>')
            sys.exit()

        host = sys.argv[1]
        with_subs = False
        # argv is [script, host, flag], so the flag is present when argc > 2
        if argc > 2:
            with_subs = True

        urls = waybackurls(host, with_subs)
        json_urls = json.dumps(urls)
        if urls:
            filename = '%s-waybackurls.json' % host
            with open(filename, 'w') as f:
                f.write(json_urls)
            print('[*] Saved results to %s' % filename)
        else:
            print('[-] Found nothing')
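One thing to know about that output file: because the CDX API returns rows of fields and the script only asks for the `original` field, every entry is a one-element list rather than a bare string. Here is a minimal sketch for flattening it into a plain list of unique URLs. The helper names `flatten_rows` and `load_urls` are made up for this example; they are not part of the script.

```python
import json


def flatten_rows(rows):
    """Turn [["http://a"], ["http://b"]] into ["http://a", "http://b"].

    Duplicates are dropped and the original order is kept.
    """
    seen = set()
    urls = []
    for row in rows:
        if not row:
            continue  # skip empty rows defensively
        url = row[0]
        if url not in seen:
            seen.add(url)
            urls.append(url)
    return urls


def load_urls(path):
    """Read a <host>-waybackurls.json file and return a flat URL list."""
    with open(path, encoding="utf-8") as f:
        return flatten_rows(json.load(f))


# In-memory demo using the same shape the script writes to disk
sample = [["http://example.com/"], ["http://example.com/about"], ["http://example.com/"]]
print(flatten_rows(sample))
```

With a real results file you would call something like `load_urls("example.com-waybackurls.json")` and loop over what comes back.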

This is the very same wayback script you’ll find in Kali Linux, and it originated from a GitHub gist posted back in 2017 by mhmdiaa.

Since then, a bunch of variations have sprung up, including one written in Go.
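If you want to roll your own variation, the easiest knob to turn is the CDX query itself. The sketch below rebuilds the script's query URL from a dict of parameters and lets you bolt on extra CDX parameters such as `from`, `to`, `limit` or `filter`. Treat it as an illustration under a few assumptions: `build_cdx_url` is a hypothetical helper, it uses `https` instead of the script's `http`, and the trailing-underscore trick for `from_` exists only because `from` is a reserved word in Python.

```python
from urllib.parse import urlencode

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def build_cdx_url(host, with_subs=False, **extra):
    """Build a CDX search URL covering every capture under host.

    Extra keyword arguments are passed through as CDX query parameters;
    a trailing underscore is stripped so from_="2020" becomes from=2020.
    """
    pattern = f"*.{host}/*" if with_subs else f"{host}/*"
    params = {
        "url": pattern,
        "output": "json",
        "fl": "original",
        "collapse": "urlkey",
    }
    for key, value in extra.items():
        params[key.rstrip("_")] = value
    # Keep *, / and : unescaped so the URL stays readable
    return CDX_ENDPOINT + "?" + urlencode(params, safe="*/:")


print(build_cdx_url("example.com", with_subs=True))
print(build_cdx_url("example.com", from_="2020", to="2021", filter="statuscode:200"))
```

The resulting string can be handed to `requests.get()` exactly like the hard-coded URLs in the script.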

Once you’re ready, there’s a whole Python package and CLI that’s pretty useful for interacting with all three of the Wayback Machine’s APIs. It’s called waybackpy. It has classes built in for the SavePageNow/Save API, the CDX Server API, and the Availability API. It’s great because it has built-in functions for grabbing only snapshots, the saves closest to a specific date, or even the newest or oldest recorded archive of a page. You can install it using pip or conda:

    pip install waybackpy -U

    conda install -c conda-forge waybackpy
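Once it’s installed, grabbing the oldest, newest, and closest-to-a-date snapshots looks roughly like the sketch below. It is based on my reading of the waybackpy 3.x documentation (`WaybackMachineCDXServerAPI` with `oldest()`, `newest()`, `near()` and an `archive_url` attribute on the result), so check it against the version you actually have. The `snapshot_summary` helper and the user agent string are made up for the example.

```python
def snapshot_summary(cdx_api, year=None, month=None):
    """Collect archive URLs from an object exposing oldest()/newest()/near().

    Works with waybackpy's CDX API object, or any stand-in with the same methods.
    """
    summary = {
        "oldest": cdx_api.oldest().archive_url,
        "newest": cdx_api.newest().archive_url,
    }
    if year is not None:
        # near() returns the capture closest to the given date
        kwargs = {"year": year}
        if month is not None:
            kwargs["month"] = month
        summary["near"] = cdx_api.near(**kwargs).archive_url
    return summary


def main(url="https://example.com"):
    # Imported here so the helper above stays usable without waybackpy installed
    from waybackpy import WaybackMachineCDXServerAPI

    user_agent = "wayback-demo/0.1"  # hypothetical user agent string
    cdx_api = WaybackMachineCDXServerAPI(url, user_agent)
    for label, archive_url in snapshot_summary(cdx_api, year=2015, month=6).items():
        print(label, archive_url)
```

Calling `main()` hits the live archive, so expect it to take a moment and to depend on what the Wayback Machine has for that URL.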

Now that you’re pretty seasoned in using the Wayback Machine, I think it’s time for you to learn just how much information the Wayback Machine actually has inside those archives. It’s more than just HTML, JavaScript, and images. It’s a goldmine of data.

Chronos - An OSINT Framework For The Wayback Machine

And then, like a Wayback Machine demigod looking down on us mortals and feeling a bit generous, mhmdiaa, the very same one who wrote the script featured above, gave us Chronos. It’s an entire OSINT framework for the Wayback Machine archives. Written in Go, it offers not only a lot of fun opportunities but also a whole lot of options for tailoring the output and parameters to whatever you need.

A few highlighted features:

- Extract endpoints and URLs from archived JavaScript code

chronos -target "spltjs.com/*" -module jsluice -output js_endpoints.json

- Calculate archived favicon hashes

chronos -target "playboy.com/favicon.ico" -module favicon -output favicon_hashes.json

- Extract archived page titles

chronos -target "github.com" -module html -module-config "html.title=//title" -snapshot-interval y -output titles.json

- Extract paths from archived robots.txt files

chronos -target "pornhub.com/robots.txt" -module regex -module-config 'regex.paths=/[^\s]+' -output robots_paths.json

- Extract URLs from archived sitemap.xml files

chronos -target "bestbuy.com/sitemap.xml" -module xml -module-config "xml.urls=//urlset/url/loc" -limit -5 -output sitemap_urls.json

- Extract endpoints from archived API documentation

chronos -target "https://docs.gitlab.com/ee/api/api_resources.html" -module html -module-config 'html.endpoint=//code' -output api_docs_endpoints.json

- Find S3 buckets in archived pages

chronos -target "github.com" -module regex -module-config 'regex.s3=[a-zA-Z0-9-\.\_]+\.s3(?:-[-a-zA-Z0-9]+)?\.amazonaws\.com' -snapshot-interval y -output s3_buckets.json
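Before pointing a regex module at thousands of snapshots, it helps to try the pattern on a small sample first. The sketch below runs the two patterns from the robots.txt and S3 examples above through Python's `re` module. Chronos is written in Go, whose regex engine differs from Python's in some details, so treat this as a quick sanity check rather than a guarantee. The sample strings are invented.

```python
import re

# Patterns copied from the chronos examples above
ROBOTS_PATHS = re.compile(r"/[^\s]+")
S3_BUCKETS = re.compile(r"[a-zA-Z0-9-\.\_]+\.s3(?:-[-a-zA-Z0-9]+)?\.amazonaws\.com")

sample_robots = "User-agent: *\nDisallow: /admin/\nAllow: /public/index.html\n"
sample_html = (
    '<img src="https://my-bucket.s3.amazonaws.com/logo.png">'
    '<script src="https://assets.s3-us-west-2.amazonaws.com/app.js"></script>'
)

print(ROBOTS_PATHS.findall(sample_robots))
# ['/admin/', '/public/index.html']

print(S3_BUCKETS.findall(sample_html))
# ['my-bucket.s3.amazonaws.com', 'assets.s3-us-west-2.amazonaws.com']
```

Note that the S3 pattern only catches bucket-as-subdomain hostnames. A path-style URL like `https://s3.amazonaws.com/my-bucket/` slips past it, so you may want a second pattern if those matter to you.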

Modules include:

- regex - Extract regex matches

- jsluice - Extract URLs and endpoints from JavaScript code using jsluice

- html - Query HTML documents using XPath expressions

- xml - Query XML documents using XPath expressions

- favicon - Calculate favicon hashes

- full - Get the full content of snapshots

Command-Line Options:

    Usage of chronos:
      -target string
            Specify the target URL or domain (supports wildcards)
      -list-modules
            List available modules
      -module string
            Comma-separated list of modules to run
      -module-config value
            Module configuration in the format: module.key=value
      -module-config-file string
            Path to the module configuration file
      -match-mime string
            Comma-separated list of MIME types to match
      -filter-mime string
            Comma-separated list of MIME types to filter out
      -match-status string
            Comma-separated list of status codes to match (default "200")
      -filter-status string
            Comma-separated list of status codes to filter out
      -from string
            Filter snapshots from a specific date (Format: yyyyMMddhhmmss)
      -to string
            Filter snapshots to a specific date (Format: yyyyMMddhhmmss)
      -limit string
            Limit the number of snapshots to process (use negative numbers for the newest N snapshots, positive numbers for the oldest N results) (default "-50")
      -snapshot-interval string
            The interval for getting at most one snapshot (possible values: h, d, m, y)
      -one-per-url
            Fetch one snapshot only per URL
      -threads int
            Number of concurrent threads to use (default 10)
      -output string
            Path to the output file
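The `-from` and `-to` flags want timestamps in the yyyyMMddhhmmss format, which is easy to get wrong by hand. Here is a small sketch that formats a Python `datetime` that way and assembles a chronos argument list from the flags listed above. The helper names `wayback_timestamp` and `chronos_args` are invented for this example, and it assumes `chronos` is on your PATH if you actually run the result.

```python
import shlex
from datetime import datetime


def wayback_timestamp(dt):
    """Format a datetime as yyyyMMddhhmmss, e.g. 20200102030405."""
    return dt.strftime("%Y%m%d%H%M%S")


def chronos_args(target, module, output, start=None, end=None, limit=None):
    """Build an argv list for chronos using only the flags shown above."""
    args = ["chronos", "-target", target, "-module", module]
    if start is not None:
        args += ["-from", wayback_timestamp(start)]
    if end is not None:
        args += ["-to", wayback_timestamp(end)]
    if limit is not None:
        # Negative values mean the newest N snapshots, positive the oldest N
        args += ["-limit", str(limit)]
    args += ["-output", output]
    return args


args = chronos_args(
    "example.com/robots.txt",
    "full",
    "robots_2020.json",
    start=datetime(2020, 1, 1),
    end=datetime(2020, 12, 31, 23, 59, 59),
    limit=-5,
)
print(shlex.join(args))
```

To run it for real, pass the list to `subprocess.run(args, check=True)`. Using a list instead of a single shell string means targets with wildcards or quotes don't need any extra escaping.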