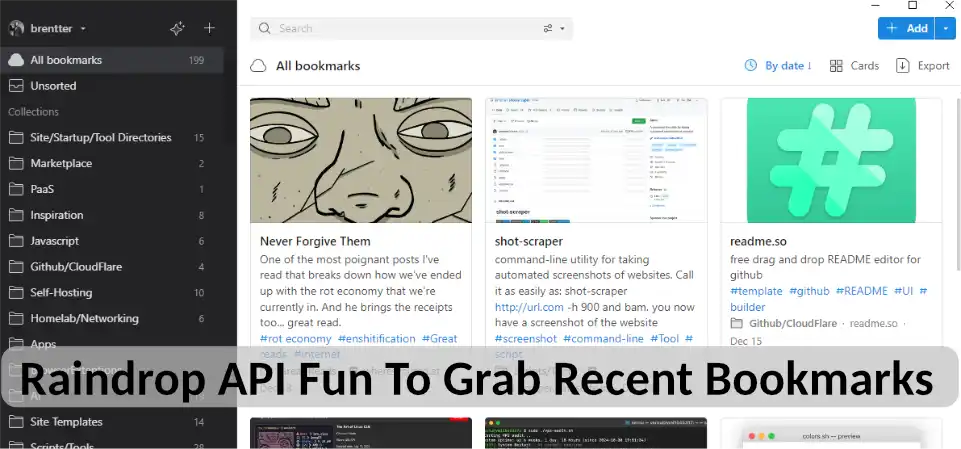

I’ve been trying to consolidate and filter all my old bookmarks, but over the past two decades I’ve used many different clients, browsers and other ways to store them, so the content is all over the place. A good example is Raindrop.io, one of the bookmarking apps I’ve been using most recently. It’s pretty, has incredible search/filtering options and built-in AI support, is available on all platforms, and does the job nicely. The only problem is that there’s no built-in RSS feed for your entire account, i.e. no “recently bookmarked” feed. They only provide native RSS feeds for collections or categories that you’ve created (assuming they’re public and not “private” collections). That bird just won’t fly for me, so I wrote a quick little Python script that grabs your most recent bookmarks. Just scroll to the bottom if you want to skip the whole recipe-site experience; otherwise, here’s a little more about exporting data from the app.

Raindrop And The Lack Of A Recently Added RSS Feed

The first thing I did was ask about it in their forum, and apparently it’s been requested numerous times over the years, with the developer always giving the same response: the feature isn’t implemented because of privacy concerns. He goes on to say that each collection you create has both a public and a private RSS feed, but the private one has a “uniq cryptic url” (his wording) that, if shared, gives full read access to that content. It’s not open source, so I have no idea how he’s set up the service, but I’d assume that changing how RSS feeds currently work would be too much of a time-sink to be worthwhile for him. Raindrop has been getting a lot of positive press over the past few years, not to mention a massive influx of new customers, so why should he spend all that time on a feature that only appeases a small subset of users? The developer has mentioned in a few of the RSS-related threads that Raindrop works well with glue-up services like IFTTT, but involving another third-party service just isn’t what I was looking for either. As my mother would say, “I’m not mad, I’m just disappointed.” Sadly, I discovered this problem after I had already signed up as a paid subscriber, and I immediately regretted my decision.

My personal opinion on anything online is that you can never provide enough RSS feeds or ways to export your own content. If I create something online using your service or application (in this example, a bookmarked link with my own tags and comments), then I should have an easy way to re-purpose and export that content from your site. This goes 20x if I’m paying for the service or have purchased the application. It also opens up a much larger issue I’ll eventually talk about: a HUGE problem we’re seeing today with people not realizing that they don’t own anything online unless they’re the ones in charge of it. You never know when company policy could change, the site could go offline, the company could go bankrupt or be sold, or any of a million other situations where the end result is that you no longer have access to your data.

Export/Backup Options And The Raindrop.io API

Although Raindrop lacks an RSS feed for recent account activity, it does offer a few other ways to download your data. You’ll need to be a paid subscriber to use these two features: an export/backup feature with options to automatically save regular backups of your account to various cloud services (like Dropbox), and an API. I went with the API option and wrote a quick little Python wrapper for it. It’s pretty basic but should handle authenticating, reading/editing/deleting raindrops and grabbing collections. If you’d like it to support any of the other API functions, feel free to submit a ticket on GitHub and I might add it for you. You can find it here: Github.com/Brentter/Raindrop.io-Python-API-Wrap
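If you’d rather skip the wrapper entirely, fetching recent bookmarks boils down to one authenticated GET request. Here’s a minimal standard-library sketch, assuming Raindrop’s REST base URL is `https://api.raindrop.io/rest/v1`, that the token from the app’s integration settings is sent as a Bearer token, and that collection ID `0` means “all raindrops.” The helper names `build_recent_request` and `fetch_recent` are hypothetical and not part of my wrapper.

```python
import json
import urllib.parse
import urllib.request

# Assumed base URL for Raindrop's v1 REST API.
BASE_URL = "https://api.raindrop.io/rest/v1"


def build_recent_request(token: str, limit: int = 25) -> urllib.request.Request:
    """Build (but don't send) a GET request for the newest raindrops.

    Collection ID 0 is assumed to mean "all raindrops"; sort=-created
    puts the newest first. perpage is clamped to 50, the documented max.
    """
    query = urllib.parse.urlencode({"perpage": min(limit, 50), "sort": "-created"})
    return urllib.request.Request(
        f"{BASE_URL}/raindrops/0?{query}",
        headers={"Authorization": f"Bearer {token}"},
    )


def fetch_recent(token: str, limit: int = 25) -> dict:
    """Send the request and decode the JSON response (needs network access)."""
    with urllib.request.urlopen(build_recent_request(token, limit), timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Keeping request construction separate from the network call makes it easy to check the URL and headers without hitting the API.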

The wrapper’s GitHub repository also includes a basic Python script that downloads the 25 most recent bookmarks from your account, strips out any unnecessary or redundant data, and saves the result locally as a .json file. Back when I put that together, I was doing something similar for a couple of other services, with an additional script that ran every few hours to check whether any of them needed updating, triggered those scripts, and then added anything new to a local database. I like keeping functions as separate as possible so they’re easier to change (and scale). This also let me add it to a self-hosted “recently saved content” page that didn’t rely on outside sources to work (only to update). For my own projects I’m very much a ‘functionality over design’ kind of guy, so I won’t be sharing that part of the project anytime soon (it’s not pretty); however, you can easily get similar results using any locally hosted RSS reader.
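The “add anything new to a local database” step could look roughly like the following. This is a minimal sketch, not the code I actually ran. It assumes SQLite as the local store and uses the bookmark’s link as the uniqueness key. The table name `bookmarks` and the helper `merge_bookmarks` are hypothetical. The input is the list of filtered dicts that the download script below produces.

```python
import json
import sqlite3


def merge_bookmarks(conn: sqlite3.Connection, bookmarks: list) -> int:
    """Insert bookmarks that aren't stored yet and return how many were new.

    The link is the primary key, so re-running against an overlapping
    batch of "recent" bookmarks never creates duplicates. Rows without
    a link violate NOT NULL and are skipped by INSERT OR IGNORE.
    """
    conn.execute(
        """CREATE TABLE IF NOT EXISTS bookmarks (
               link    TEXT PRIMARY KEY NOT NULL,
               title   TEXT,
               excerpt TEXT,
               cover   TEXT,
               tags    TEXT,  -- stored as a JSON array string
               created TEXT
           )"""
    )
    before = conn.total_changes
    conn.executemany(
        "INSERT OR IGNORE INTO bookmarks VALUES (?, ?, ?, ?, ?, ?)",
        [
            (
                b.get("link"),
                b.get("title"),
                b.get("excerpt"),
                b.get("cover"),
                json.dumps(b.get("tags") or []),
                b.get("created"),
            )
            for b in bookmarks
        ],
    )
    conn.commit()
    # total_changes only counts rows actually inserted, not ignored ones.
    return conn.total_changes - before
```

A scheduled job (cron, a systemd timer, etc.) could then load `recentraindrops.json` with `json.load` and pass it to `merge_bookmarks(sqlite3.connect("bookmarks.db"), ...)` every few hours.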

The Download Recently Added Bookmarks Python Script

Here’s the basic download script using my Raindrop.io API wrapper in Python. You’ll need to add your own token from the app to a .env file before running it (full instructions are on the GitHub page linked above):

```python
import argparse
import json

import requests

from raindrop_api import RaindropAPI  # the wrapper from the GitHub repo above

# You can change the number of results it will pull with -l or --limit - Default is 25


def get_recent_raindrops(api, limit=25):
    """Fetch the most recent raindrops across all collections (collection ID 0)."""
    url = f"{api.base_url}/raindrops/0"
    # sort=-created returns the newest first; the API caps perpage at 50.
    params = {"perpage": limit, "sort": "-created"}
    response = requests.get(url, headers=api.headers, params=params, timeout=30)
    response.raise_for_status()  # fail loudly on a bad token or API error
    return response.json()


def filter_raindrops(raindrops):
    """Filter the raindrops to only include specific fields."""
    filtered = []
    for item in raindrops.get('items', []):
        filtered.append({
            'link': item.get('link'),
            'title': item.get('title'),
            'excerpt': item.get('excerpt'),
            'cover': item.get('cover'),
            'tags': item.get('tags'),
            'created': item.get('created'),
        })
    return filtered


def main():
    parser = argparse.ArgumentParser(description='Fetch and filter recent raindrops.')
    parser.add_argument('-l', '--limit', type=int, default=25,
                        help='Number of recent raindrops to fetch')
    args = parser.parse_args()

    # Initialize the RaindropAPI (expects your token in .env, see the repo README)
    api = RaindropAPI()

    # Get the most recent raindrops
    recent_raindrops = get_recent_raindrops(api, limit=args.limit)

    # Filter the raindrops
    filtered_raindrops = filter_raindrops(recent_raindrops)

    # Print the filtered raindrops in JSON format
    print(json.dumps(filtered_raindrops, indent=4))

    # Save the filtered raindrops to a file in JSON format
    with open('recentraindrops.json', 'w', encoding='utf-8') as file:
        json.dump(filtered_raindrops, file, indent=4)


if __name__ == "__main__":
    main()
```

Raindrop’s API returns a bunch of data fields I didn’t have a use for, hence the quick filtering job. Feel free to use this and the API wrapper for whatever purpose you want. If it’s helpful, I’d love to hear about what you made!
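Since the whole point was a “recently added” feed, one way to close the loop is to turn `recentraindrops.json` into a static RSS file that any locally hosted reader can subscribe to. Here’s a minimal standard-library sketch. It assumes the six filtered fields from the script above and that `created` is an ISO 8601 timestamp ending in `Z` (check your own output). `build_rss`, `to_rfc822` and the channel title/link are placeholders I made up for illustration.

```python
from datetime import datetime
from email.utils import format_datetime
from typing import Optional
from xml.etree import ElementTree as ET


def to_rfc822(iso: Optional[str]) -> Optional[str]:
    """Convert an ISO 8601 timestamp (e.g. '...Z') to the RFC 822 form RSS uses."""
    if not iso:
        return None
    # fromisoformat() only accepts a trailing 'Z' on Python 3.11+, so swap it out.
    dt = datetime.fromisoformat(iso.replace("Z", "+00:00"))
    return format_datetime(dt)


def build_rss(bookmarks, title="Recently saved bookmarks", link="http://localhost/"):
    """Return an RSS 2.0 document (as a str) with one <item> per bookmark."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = "Generated from recentraindrops.json"
    for b in bookmarks:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = b.get("title") or b.get("link")
        ET.SubElement(item, "link").text = b.get("link")
        ET.SubElement(item, "guid").text = b.get("link")
        if b.get("excerpt"):
            ET.SubElement(item, "description").text = b["excerpt"]
        # Raindrop tags map naturally onto RSS <category> elements.
        for tag in b.get("tags") or []:
            ET.SubElement(item, "category").text = tag
        pub = to_rfc822(b.get("created"))
        if pub:
            ET.SubElement(item, "pubDate").text = pub
    # ElementTree handles XML escaping (&, <, >) for titles and excerpts.
    return ET.tostring(rss, encoding="unicode")
```

You could write the result to a file somewhere your reader can reach, for example with `Path("recent.xml").write_text(build_rss(data), encoding="utf-8")`, and rerun it whenever the download script runs.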

Cheers,

Brent